LinkedIn’s New Terms — You can opt out of your data being used for AI training

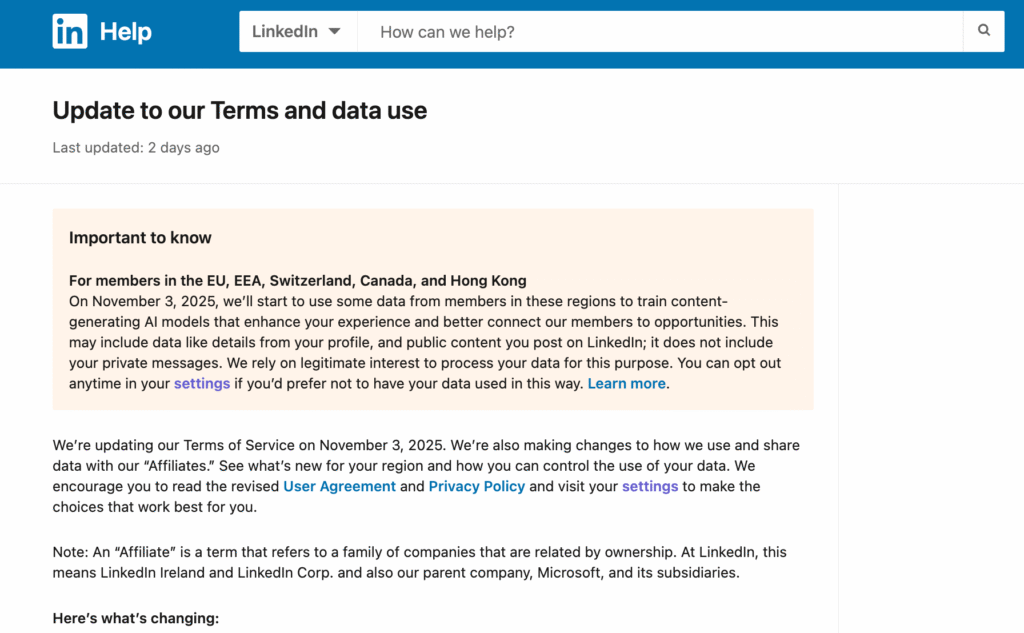

“Beginning November 3, 2025, we’ll start using some data from members in certain regions to train content-generating AI models … You will have the option to opt out.” — LinkedIn Help, “Update to our Terms and data use”

If you’re active on LinkedIn — posting articles, sharing insights, engaging with others’ content — here’s a vital policy change you should know about: starting November 3, 2025, LinkedIn will by default begin using certain member data and content to train generative AI models (in many regions). But here’s the good news: LinkedIn is also giving you the ability to opt out of that usage.

In this article I’ll walk you through:

- What the change is, exactly (and how it differs by region)

- What kinds of data or content LinkedIn intends to use

- What opting out means — and what it doesn’t

- How to opt out (step by step)

- The trade-offs, controversies, and implications

- What you can do now to protect your content and privacy

By the end, you’ll have the clarity and practical steps to manage your LinkedIn presence under this new regime.

1. What’s Changing — And Why It Matters

What LinkedIn is doing now

LinkedIn is updating its Terms of Service and Privacy Policy effective November 3, 2025, to allow (in many regions) the use of member data and content to train “content-generating AI models.” In other words: your profile, posts, and public interactions may be used as training data to help power LinkedIn’s AI features (e.g. content suggestions, rewrites, message drafting, etc.).

The setting will be turned on by default (i.e. you are opted in), so unless you actively opt out, your data will feed those models.

Previously, LinkedIn had been more restrained — some regions (notably parts of Europe) were excluded or under limited regimes. But this new update expands and formalizes that usage.

Additionally, LinkedIn will share (in many regions) more of your data with Microsoft and its affiliates to support more personalized advertising.

Where this takes effect (regions) — and where not (yet)

The regions immediately affected include:

- The UK

- Europe (EEA)

- Switzerland

- Canada

- Hong Kong

- Some other non-U.S. regions

The U.S. was largely excluded in LinkedIn’s announcement (at least initially) — meaning U.S. users may not see the same scope or have the same settings yet. (However, that doesn’t mean the U.S. is permanently excluded — policies can evolve.)

LinkedIn’s own Help page, “Update to our Terms and data use,” states that it will be updated with region-specific details.

2. What Data or Content Is Affected?

When a platform says it will “use data to train AI,” that phrase covers a wide range of possible inputs. LinkedIn has offered some clarity. Here’s a breakdown:

What is included

According to LinkedIn’s FAQs and support pages:

- Profile information (e.g., name, job title, work history, education, skills, endorsements)

- Content you post publicly — posts, articles, comments, poll responses, etc.

- Public activity — e.g. your interactions, feed engagement (likes, shares, comments)

- Feedback, ratings, and responses you provide.

LinkedIn states that private messages and certain sensitive personal or payment data are excluded from this training usage.

Also, importantly, the usage covers “content-generating AI” models (i.e. generative AI), meaning models that produce text such as drafts, rewrites, and suggestions. It does not necessarily cover non-content models (e.g. security, anti-abuse); for those other uses, LinkedIn may require separate objection channels.

What isn’t included (or is exempt)

- Private messages (DMs, InMail) are excluded from generative AI training usage.

- Data you have restricted in your settings (e.g. if you’ve set your profile visibility limits) likely won’t be used beyond those restrictions.

- Opting out is not retroactive: turning off the setting only affects future use, so data already used for training cannot be pulled back out.

- LinkedIn says it will not use data of members under 18 for content-generating AI training.

3. What Does “Opting Out” Mean — And What It Doesn’t

What opting out does

- When you opt out (toggle off the relevant setting), LinkedIn and its affiliates will no longer use your future data or content to train content-generating AI models.

- It also prevents sharing with Microsoft affiliates (for AI usage) to the extent that the setting allows.

- It gives you control over whether your posts or profile updates (going forward) become part of the training corpus.

What opting out does not — the limitations

- It does not undo prior use. Content and data used before you opt out remain part of whatever models they’ve been ingested into.

- It does not affect non-content AI models (e.g. models for ranking, recommendations, security) — for those, you may need to submit a formal objection via LinkedIn’s Data Processing Objection form.

- Other members may quote, repost, or reuse your content in their own posts, so it could still influence training indirectly.

- Some information may still be used under broader “legitimate interest” legal bases, especially in regions with data protection regimes.

In short: opting out gives you forward protection; it does not cause data to disappear from already built or ongoing AI models.

4. How to Opt Out — A Step-by-Step Guide

Here’s how to opt out of LinkedIn’s generative AI training once the new terms take effect on November 3:

- Sign in to LinkedIn and go to Me → Settings & Privacy

- In the left menu, click Data Privacy

- Look for “Data for Generative AI Improvement” (or “How LinkedIn uses your data”)

- Toggle off “Use my data for training content-creating AI models”

- (Optional) Submit a Data Processing Objection request (via LinkedIn’s form) if you want to object more broadly to AI processing beyond content generation.

It’s a relatively simple toggle, but the key is to flip it before your data begins flowing into the AI pipelines, or as early as possible. You can go straight to the setting here: https://www.linkedin.com/mypreferences/d/settings/data-for-ai-improvement

Because LinkedIn is turning the setting on by default, many users may never notice until the change happens. That’s why awareness is critical.

If you miss the deadline, it’s still possible to opt out — just remember that past data won’t be removed.

5. Trade-offs, Risks & Reactions

Why LinkedIn is doing this (the upside)

From LinkedIn’s perspective, using actual member data and content to train AI models can:

- Improve the quality, relevance, and accuracy of AI-generated suggestions (draft posts, replies, messages).

- Help the platform offer more intelligent features — rewriting, auto-generation, content enhancement.

- Tie its AI features more closely to real user content, making the output feel more grounded in professional discourse.

- Fuel more precise ad targeting (with data sharing to Microsoft) — potentially increasing ad revenue.

- Enable LinkedIn/Microsoft affiliate synergies (data, ad signals) to compete more aggressively in the AI space.

In essence: richer data yields better AI, which enhances product and business value.

Concerns, criticisms, and risks

- Privacy & consent concerns: Automatically opting users in by default, rather than asking for explicit consent, can feel intrusive. Critics argue people may not notice the change.

- Control & transparency: Some users may find the settings obscure or confusing, especially around how “opt out” interacts with other AI uses or data sharing. Also, how LinkedIn redacts or anonymizes data is partly a “black box.”

- Legal and regulatory scrutiny: In jurisdictions with strong data protection laws (e.g. the GDPR in the EU and the UK GDPR), regulators may examine whether LinkedIn’s “legitimate interest” justification is sufficient for automatically using content to train AI.

- Reputational risk & backlash: Users may balk at seeing their professional or career content used as “training fuel” for AI, especially content that was meant for peer readers, not to generate more machine content.

- Value extraction vs. creator control: If your original content is used to train generative models that produce derivative content, questions arise around attribution, derivative works, and whether value accrues to content creators.

- Irreversibility of data usage: Because prior data usage cannot be undone, sensitive content published earlier (e.g. insights, strategy, sensitive commentary) could already be baked into training sets.

6. What You Can Do — Proactively

Here are steps you can take (beyond just toggling off the AI setting) to better protect your content and guard your digital presence:

Audit your existing content

- Review your past posts, articles, comments — do you have anything you’d rather not have used as AI training data?

- Consider editing, archiving, or deleting particularly sensitive or revealing content.

- Clean up excessive detail in your profile (if it’s not essential), especially information you wouldn’t want AI models to absorb.

Adjust privacy settings

- Ensure your profile visibility settings are appropriate.

- Review the visibility of posts (public vs connections only).

- Limit what you include in your profile or experience that feels overly personal.

Stay up to date with LinkedIn’s notices

- Monitor LinkedIn’s Help and Privacy pages, which will likely provide region-specific updates (in particular, the Help page “Update to our Terms and data use”).

- Watch for communications or alerts from LinkedIn about the transition.

Use alternative platforms or distribution

- If you produce sensitive, highly original content, consider hosting it on a personal blog or a platform where you have more direct control of the terms.

- Use Creative Commons licenses or explicit notices when sharing content to signal how others may reuse it. Note that these do not change the license you grant LinkedIn under its own terms.

Be vocal and demand accountability

- Engage in discussions and comment publicly about this change (as you’re doing now; feel free to add your comments below).

- Encourage LinkedIn to maintain transparency around how data is anonymized, filtered, or used.

- Follow regulatory and policy debates around AI, content rights, and platform accountability.

Final Thoughts

This policy change from LinkedIn represents an important moment where user content and AI development intersect more explicitly. As a professional network, LinkedIn is now asking its users to partly unlock their “career voice” to train the AI that will generate professional content. But it’s also giving you a choice — provided you act.

If you post on LinkedIn regularly, or rely on it for thought leadership, reputation, or personal branding, my advice is: don’t wait. As soon as the new terms are live (or shortly before), toggle off that generative AI setting. Audit your content. Decide what you want publicly searchable vs private.

In the broader evolution of AI, policies like this are testing the balance between innovation and individual data rights. Opting out doesn’t mean you reject AI entirely — it means asserting agency over what becomes training material. And your voice, your insights, your expertise — they deserve that control.